Executive Summary

Southeast Asia is rapidly adopting Generative AI (GenAI) and Large Language Models (LLMs), unlocking new business opportunities while introducing new risks. Many organizations struggle with AI governance, trying to control every variable. However, a more effective approach is to build flexible systems that can anticipate, respond to, and recover from risks.

This principle was the core message at an event by The Generative Beings (TGB), SUPA, and Antler, where experts discussed how businesses can move beyond rigid control mechanisms toward adaptable AI governance. The key takeaways below highlight practical strategies for ensuring AI safety, resilience, and compliance in an evolving landscape.

Key Takeaways:

- AI Risk Management Evolution – Move from reactive to proactive strategies with continuous monitoring, adaptable compliance, and ethical oversight.

- Preventing Data Leaks – Address risks from unintentional employee actions, prompt injection attacks, and misconfigurations with strong security protocols.

- Building Safety Guardrails – Implement input filtering, output moderation, and access controls to create resilient AI systems.

- Navigating Regulatory Compliance – Stay ahead of evolving laws like the EU AI Act by prioritizing transparency, fairness, and data protection.

- AI Risk Assessment – Establish governance frameworks with scenario-based testing and continuous risk evaluations.

Introduction

Southeast Asia is emerging as a global AI innovation hub. However, as AI adoption accelerates, so do the risks. The traditional approach of trying to control every variable in AI systems is neither practical nor effective. Instead, businesses must focus on building flexible systems that can withstand uncertainties and recover from failures.

In a recent event by The Generative Beings (TGB), in collaboration with SUPA and Antler, AI experts and business leaders were brought together to explore this challenge. Sue Yen Leow from Cymetrics framed the discussion with a key insight: “Organizations need to stop viewing AI as a system that can be perfectly controlled. Instead, they should design governance models that can anticipate, respond to, and recover from risks.”

The following sections outline five critical insights from the event, offering a roadmap for AI safety and governance in Southeast Asia.

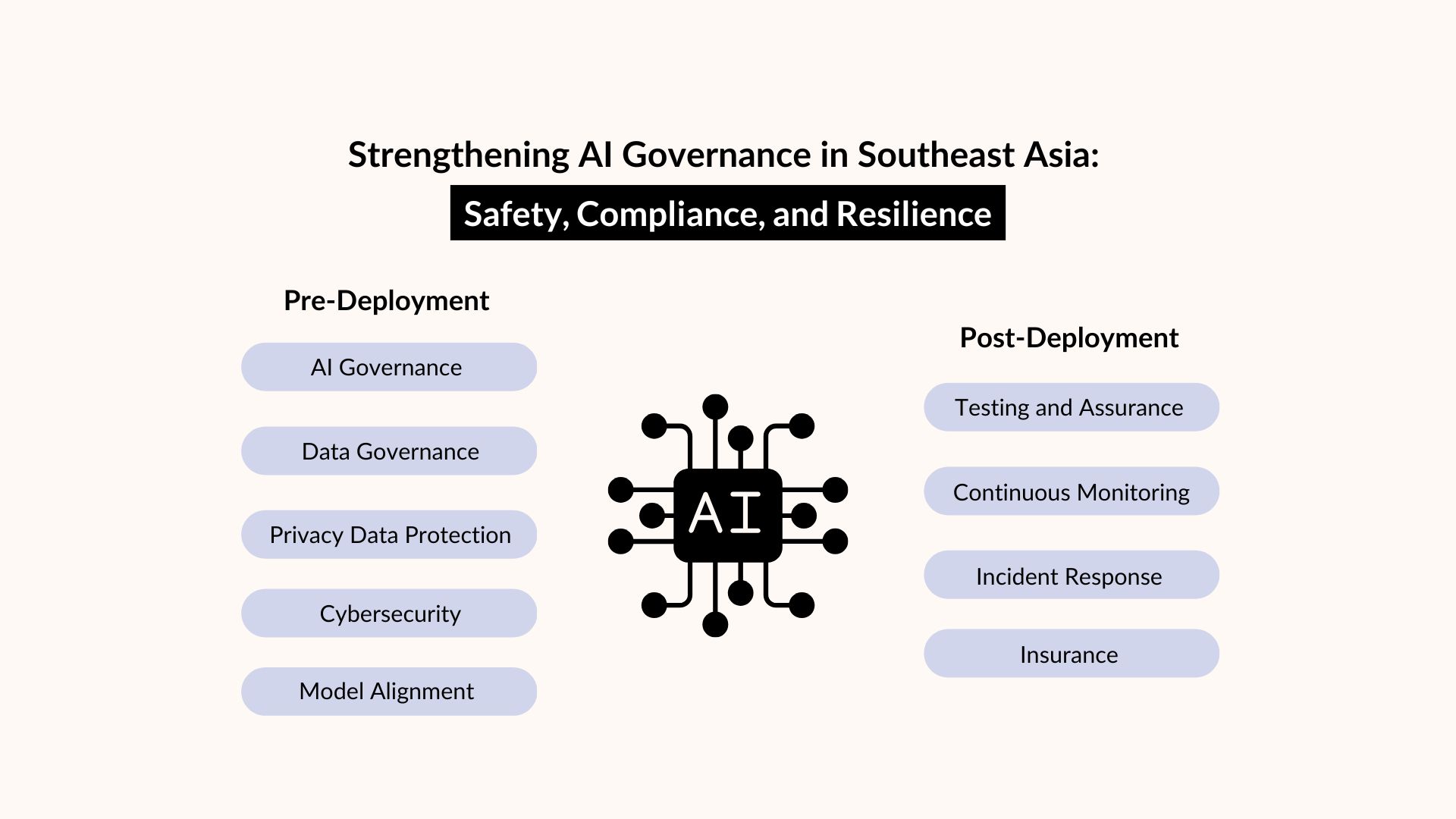

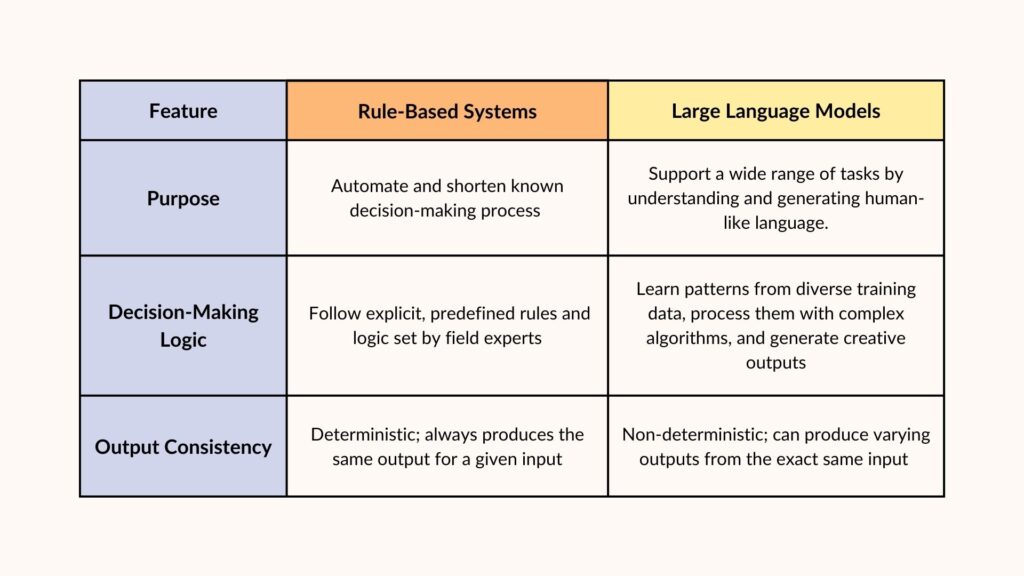

1. AI Risk Management: Shifting from Control to Adaptability

AI systems, particularly LLMs, don’t behave like traditional software. Unlike rule-based systems that follow predefined instructions, LLMs generate responses probabilistically, meaning outputs may vary. This shift requires a new approach to AI risk management—one that prioritizes adaptability over rigid controls.

Key Strategies for Adaptability:

- Continuous Monitoring – Use real-time tracking to detect anomalies and emerging risks.

- Dynamic Compliance – Align governance models with evolving regulations and ethical considerations.

- Incident Response Protocols – Implement recovery mechanisms to minimize the impact of AI failures.

Sue Yen Leow emphasized: “The goal isn’t to eliminate uncertainty—it’s to build systems that can handle the unexpected.”

To further illustrate the key differences between these two types of systems, consider the following comparison in Figure 1.1:

2. Data Leakage: A Growing Threat

Data leaks are among the biggest risks in AI deployment. In 2023, Amazon faced a major breach when employees inadvertently shared proprietary code via ChatGPT. AI safety isn’t just about cybersecurity—it’s about creating a culture of awareness and resilience.

Common Data Leakage Risks:

- Unintentional Employee Actions – Staff unknowingly inputting sensitive data into AI models.

- Training Data Contamination – Confidential information embedded in AI-generated outputs.

- Prompt Injection Attacks – Malicious inputs tricking AI into revealing sensitive data.

How to Prevent Data Leaks:

- Implement Input/Output Filtering – Block sensitive data before it reaches AI models

- Enforce Data Anonymization – Prevent AI from processing identifiable personal data

- Regular Security Audits – Proactively identify vulnerabilities before they escalate

- Employee Training Programs – Ensure responsible AI usage through education and awareness

3. Building Safety Guardrails for AI

Safety guardrails are essential for mitigating risks across the entire AI lifecycle. Instead of one-time risk assessments, organizations should build self-reinforcing safety mechanisms that evolve with AI applications.

Key Safety Measures:

- Input Filtering & Output Moderation – Prevent harmful or unintended AI-generated responses

- Access Control Systems – Limit AI access to authorized users and applications

- Continuous Testing & Red-Teaming – Proactively identify and fix vulnerabilities

- Audit Trails – Maintain transparent logs of AI decisions and system interactions

Sue Yen Leow stressed: “Testing isn’t a one-time task; it’s an ongoing process to keep AI safe and reliable.”

4. Navigating Regulatory Compliance: A Moving Target

As AI regulations evolve, businesses need governance models that can adapt to new legal frameworks without stifling innovation.

Key Compliance Areas:

- EU AI Act Readiness – Ensure transparency and risk assessments for high-risk AI applications

- Industry-Specific Regulations – Address sector-specific compliance in finance, healthcare, and security

- Data Protection Standards – Align with GDPR, PDPA, and regional privacy laws

- Documentation & Audit Trails – Maintain clear records for regulatory review and AI accountability

Proactive Compliance Strategy: Don’t wait for laws to change—build flexible governance structures now to stay ahead.

5. AI Risk Assessment: Preparing for the Unexpected

Risk management isn’t just about identifying threats—it’s about building systems that can recover from failures. Traditional risk models based on static checklists are no longer sufficient.

Elements of a Resilient Risk Framework:

- Scenario-Based Testing – Red-team exercises to stress-test AI models

- Real-Time Risk Monitoring – Detect emerging issues through automated oversight

- Adaptive Governance – Policies that evolve alongside AI advancements

- Transparent Stakeholder Communication – Keeping teams and regulators informed about AI risks and mitigation strategies

Sue Yen Leow concluded: “The future of AI safety is about resilience. Organizations that embed adaptability into their risk frameworks will be better equipped to handle the challenges ahead.”

Strategic Path Forward

Southeast Asia is uniquely positioned to lead in responsible AI development. The insights from this event highlight that AI safety isn’t just about minimizing risks—it’s about building better systems from the start.

By shifting away from rigid controls and embracing adaptive governance, businesses can:

- Build AI systems that anticipate, respond to, and recover from risks

- Stay compliant with evolving AI laws without hindering innovation

- Foster a culture of continuous learning and ethical AI adoption

Organizations like TGB, SUPA, Antler, and Cymetrics are demonstrating that AI governance isn’t about eliminating uncertainty—it’s about making AI both powerful and safe. By embracing flexibility, resilience, and proactive risk management, Southeast Asia can set a global benchmark for ethical AI innovation.

Need help navigating AI governance in Southeast Asia? SUPA offers expert guidance and solutions. Contact isaac@supa.so or visit our website.