SUPA is excited to announce the release of our Advent of Code ECV Dataset on Hugging Face. It is built for AI engineers and researchers who want to push the boundaries of frontier AI in code generation. In this blog post, we look at what makes the dataset unique, how it was built, and how you can integrate it into your projects.

The Vision Behind the Dataset

High-quality datasets are the backbone of high-performing AI systems. At SUPA, we are committed to delivering data that is both reliable and enriched with context. The Advent of Code ECV Dataset is built on three core principles, Expanded, Curated, and Verified (ECV):

• Expanded: Featuring multiple solutions for each Advent of Code problem, providing diverse coding approaches.

• Curated: Every solution is carefully selected to ensure a rich and varied set of data points.

• Verified: Each solution is tested and confirmed functional, ensuring robustness in AI training.

This dataset is not just another collection of code snippets. It pairs syntactically correct, working code with the full problem context, and both matter when training modern language models to solve problems.

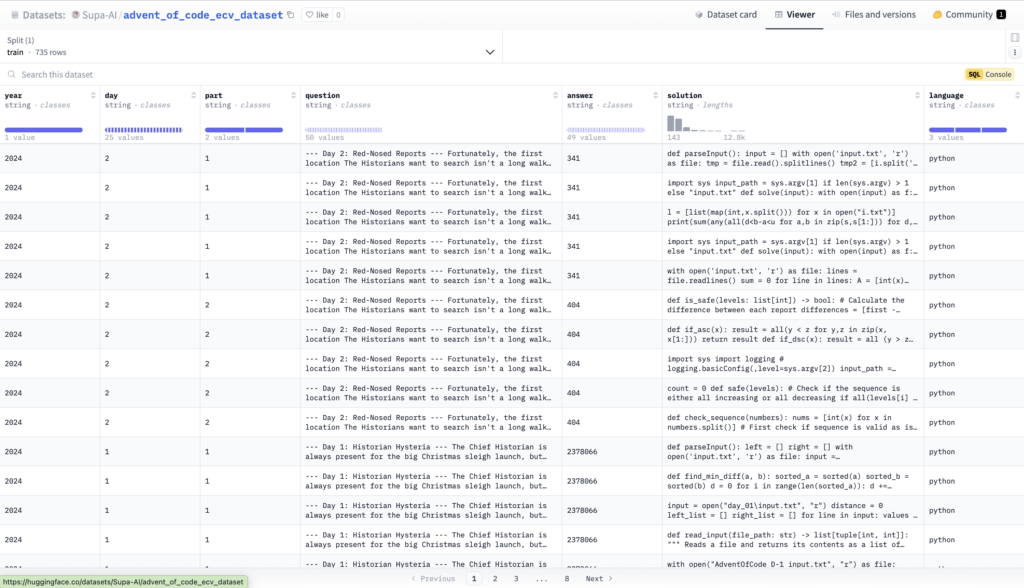

What’s Inside the Advent of Code ECV Dataset?

Diverse Programming Languages and Solutions

For the year 2024, our dataset includes solutions in:

• Python: 245 solutions

• JavaScript: 245 solutions

• Ruby: 245 solutions

Each day’s challenge, from Day 1 to Day 25, is covered by at least five different solutions per part. This diversity lets models trained on the dataset learn from a range of coding styles and approaches.

Rich Contextual Information

Every entry in the dataset includes:

• Problem Description: Detailed problem statements, which not only pose a challenge but also provide a narrative context. This helps in training models to understand and generate context-aware solutions.

• Solution Code: Tested and verified solutions that work out-of-the-box.

Example Data Instance

{
  "Year": "2024",
  "Day": "1",
  "Part": "1",
  "Question": "--- Day 1: Historian Hysteria ---\n\nThe Chief Historian is always present for the big Christmas sleigh launch... [full prompt truncated for brevity]",
  "Answer": "2378066",
  "Solution": "raw = open(\"i.txt\").readlines()\n\nl = sorted(int(x.split()[0]) for x in raw)\nr = sorted(int(x.split()[1]) for x in raw)\n\nprint(sum(abs(l[i] - r[i]) for i in range(len(l))))",
  "Language": "python:3.9"
}

This structured format gives every entry the complete problem narrative, a verified solution, and the expected answer, so each record is a self-contained learning example. The test inputs used for verification are stored separately, as described below.
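As a rough illustration of how a record like this could be checked, the sketch below writes a puzzle input to `i.txt` (the file name the sample solution reads), runs the `Solution` string with the local Python interpreter, and compares the printed output with `Answer`. The helper name `check_record` and the six-line input are assumptions made for this example. It is not SUPA's pipeline, which runs solutions inside Docker.

```python
import subprocess
import sys
import tempfile
from pathlib import Path


def check_record(record: dict, puzzle_input: str, timeout: float = 10.0) -> bool:
    """Run record["Solution"] on puzzle_input and compare stdout to record["Answer"].

    Hypothetical helper: assumes a Python solution that reads "i.txt"
    from its working directory, as the sample record does.
    """
    with tempfile.TemporaryDirectory() as workdir:
        Path(workdir, "i.txt").write_text(puzzle_input)
        Path(workdir, "solution.py").write_text(record["Solution"])
        result = subprocess.run(
            [sys.executable, "solution.py"],
            cwd=workdir,
            capture_output=True,
            text=True,
            timeout=timeout,
        )
    # A solution passes only if it exits cleanly and prints the expected answer.
    return result.returncode == 0 and result.stdout.strip() == record["Answer"]


# Solution string copied from the sample record above.
SAMPLE_SOLUTION = (
    'raw = open("i.txt").readlines()\n\n'
    "l = sorted(int(x.split()[0]) for x in raw)\n"
    "r = sorted(int(x.split()[1]) for x in raw)\n\n"
    "print(sum(abs(l[i] - r[i]) for i in range(len(l))))"
)

# Small hand-written input for this sketch; its answer (11) is not the
# dataset's "Answer" value, which belongs to a full-size puzzle input.
SAMPLE_INPUT = "3 4\n4 3\n2 5\n1 3\n3 9\n3 3\n"

if __name__ == "__main__":
    record = {"Solution": SAMPLE_SOLUTION, "Answer": "11"}
    print(check_record(record, SAMPLE_INPUT))
```

Running untrusted solutions directly on your machine is risky, which is one reason to isolate execution in containers for anything beyond a quick experiment.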

How We Built the Dataset

Data Collection, Cleaning, and Verification

We collected solutions from multiple reputable sources and put them through a rigorous data-cleaning process. We remove inconsistencies, verify that each solution is functionally sound, and validate every code submission. The result is a dataset you can rely on for both training and evaluation.

For a deeper dive into the technical side of our cleaning and verification process, including how we use Docker containers for automated code execution, see our detailed blog post: Preparing Code Eval Datasets: Data Cleaning and Automated Code Execution for Advent of Code with Docker and Python. It outlines the architecture of our system, which uses language mappings to select the appropriate Docker image, processes input files dynamically, captures execution output, and stores the results efficiently.
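To make the language-mapping idea concrete, here is a minimal sketch of how a `Language` value could be turned into a `docker run` command. The mapping table, the `node:20` and `ruby:3.3` image tags, the `/work` mount point, and the function name are all assumptions made for illustration, not the configuration from the linked post. Only `python:3.9` comes from the sample record.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Runtime:
    image: str        # Docker image the solution runs in
    filename: str     # file name the solution is saved under
    interpreter: str  # executable invoked inside the container


# Hypothetical mapping from language family to runtime settings.
RUNTIMES = {
    "python": Runtime("python:3.9", "solution.py", "python"),
    "javascript": Runtime("node:20", "solution.js", "node"),
    "ruby": Runtime("ruby:3.3", "solution.rb", "ruby"),
}


def build_docker_command(language: str, host_dir: str) -> list[str]:
    """Build the argument list for running one solution in a container.

    `language` may carry a version suffix such as "python:3.9"; only the
    part before the colon is used to look up the runtime.
    """
    family = language.split(":", 1)[0].lower()
    runtime = RUNTIMES[family]  # raises KeyError for unmapped languages
    return [
        "docker", "run", "--rm",
        "--network", "none",          # solutions should not need network access
        "-v", f"{host_dir}:/work",    # mount solution and input files
        "-w", "/work",                # run from the mounted directory
        runtime.image,
        runtime.interpreter, runtime.filename,
    ]
```

The returned list can be passed to `subprocess.run(..., capture_output=True, text=True)` to capture the solution's output for comparison with the expected answer.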

Verification Through Test Cases

Each problem in the Advent of Code ECV Dataset is accompanied by three distinct test cases. These are organized in a well-structured file system that mirrors the challenge’s narrative. For example:

test_cases/
├── 2024/
│   ├── ori_prompt/
│   │   ├── day1_part1.txt
│   │   ├── day1_part2.txt
│   │   └── ...
│   ├── test_case1/
│   │   ├── answers.csv
│   │   ├── day_1_input.txt
│   │   └── ...
│   └── test_case2/
│       ├── answers.csv
│       ├── day_1_input.txt
│       └── ...

This structure supports reproducibility and aids in the fine-tuning of AI models by providing multiple validation points for each solution.
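If you script against this layout, small path helpers keep the naming rules in one place. The sketch below assumes the file-name patterns visible in the listing (`dayN_partM.txt`, `day_N_input.txt`, `answers.csv`) hold for every day and test case. The function names are hypothetical, and the layout of `answers.csv` is not covered here because the post does not describe it.

```python
from pathlib import PurePosixPath


def prompt_path(root: str, year: int, day: int, part: int) -> PurePosixPath:
    """Path to the original prompt text for one puzzle part."""
    return PurePosixPath(root) / str(year) / "ori_prompt" / f"day{day}_part{part}.txt"


def input_path(root: str, year: int, test_case: int, day: int) -> PurePosixPath:
    """Path to the puzzle input for one day within one test case."""
    return PurePosixPath(root) / str(year) / f"test_case{test_case}" / f"day_{day}_input.txt"


def answers_path(root: str, year: int, test_case: int) -> PurePosixPath:
    """Path to the expected answers for one test case."""
    return PurePosixPath(root) / str(year) / f"test_case{test_case}" / "answers.csv"


if __name__ == "__main__":
    print(prompt_path("test_cases", 2024, 1, 1))
    print(input_path("test_cases", 2024, 1, 1))
    print(answers_path("test_cases", 2024, 2))
```

`PurePosixPath` is used so the output always has forward slashes. Swap in `pathlib.Path` when you actually open the files on disk.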

Getting Started: Integrating the Dataset into Your Workflow

Integrating the Advent of Code ECV Dataset into your project is straightforward. Here’s how you can download and start using it with Python:

from huggingface_hub import hf_hub_download
import pandas as pd

REPO_ID = "Supa-AI/advent_of_code_ecv_dataset"
FILENAME = "aoc.csv"

# Download the dataset file from Hugging Face and load it into a DataFrame
dataset_path = hf_hub_download(repo_id=REPO_ID, filename=FILENAME, repo_type="dataset")
dataset = pd.read_csv(dataset_path)

print(dataset.head())

This code snippet downloads the dataset directly from the Hugging Face repository and loads it into a Pandas DataFrame, ready for analysis and integration into your AI training pipelines.
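Once the file is on disk, a common next step is to slice it by language, day, and part. The sketch below does this with only the standard library, as an alternative for scripts that do not need pandas. It assumes the CSV columns match the field names in the example instance (`Year`, `Day`, `Part`, `Solution`, `Language`), which you should confirm against the downloaded file. The function names are illustrative.

```python
import csv
from collections import Counter


def load_rows(csv_path: str) -> list[dict]:
    """Read the dataset CSV into a list of dicts, one per solution."""
    # newline="" lets the csv module handle newlines inside quoted fields,
    # which matters because solutions and prompts span multiple lines.
    with open(csv_path, newline="", encoding="utf-8") as handle:
        return list(csv.DictReader(handle))


def language_family(row: dict) -> str:
    """Strip a version suffix, e.g. "python:3.9" -> "python"."""
    return row["Language"].split(":", 1)[0].lower()


def solutions_for(rows: list[dict], language: str, day: int, part: int) -> list[str]:
    """Return the solution code for one language, day, and part."""
    return [
        row["Solution"]
        for row in rows
        if language_family(row) == language.lower()
        and str(row["Day"]) == str(day)
        and str(row["Part"]) == str(part)
    ]


def count_by_language(rows: list[dict]) -> Counter:
    """Count how many solutions each language family contributes."""
    return Counter(language_family(row) for row in rows)
```

For example, `solutions_for(load_rows(dataset_path), "python", 1, 1)` would return the Python solutions for Day 1, Part 1, and `count_by_language` gives a quick check against the per-language totals listed earlier.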

Looking Ahead: Future Developments and Community Contributions

SUPA is committed to continuous improvement. Future releases will expand the dataset to include more languages and challenges from additional years. We invite the community to contribute by submitting solutions or suggesting improvements. If you have a contribution, please email us at developers@supahands.com.

Human Domain Expertise – Let’s Shape the Future of AI Together

If your team is looking for help to curate data from experienced software engineers, product designers, or even specialized PhDs, we’re here to help.

We have a robust network of experts whose real-world experience can enrich your AI models. Whether it’s refining model outputs or creating more human-centric training data, these subject matter experts bring invaluable insights that can bridge the gap between automated systems and nuanced human intelligence.

If your AI team needs expert collaboration on its projects, reach out to us at isaac@supa.so or through our website.